Veilwalker: Realms Divided

One of my clients came to me a while back with a simple question: “How can AI help speed up the development of a game world, and create a unique and engaging story?” I was intrigued, so I started thinking about it. One thing we usually work hard to avoid when using generative AI is what we call “hallucination”: the tendency of a Large Language Model (LLM) to stray from the prompt it was given and make things up. Counter-intuitively, this project needs to harness that tendency, with guardrails in place so we still end up with something coherent.

So I proposed a game, and yes, I proposed an RPGMaker game. I expect a little bit of flak for that decision, but the goal of this project is the story writing, not the game engine or the technical feats of the game itself, so we wanted something that allows for rapid story development.

What makes a good Generative Story?

We wanted to create an experience for the player while feeding our AI models a steady stream of changing prompts and circumstances, so we settled on an RPG archetype that many storefronts and distributors have dubbed “choices matter”. In reality, our original inspiration came from homebrew tabletop RPGs.

A few years ago, the charity we work with, A Stage Reborn, ran a short podcast-format series built around a homebrewed DnD 5e game, and I was the DM. I set some guardrails on my own storytelling: I would not write the next section of the story until the preceding session had ended. I wanted the players to shape their own world. A few things came out of that which have been in-jokes in the ASR community ever since…

Roz – The Bugbear

Oh man, Roz. The players got a little out of control, and I needed a plot device to at least keep them out of game-ending danger, so I created a character: a Bugbear that would quietly push the players in a certain direction. At one point it needed to speak, and the only voice I could come up with was an impression of Roz from Monsters, Inc. And so Roz the Bugbear was born.

Torchcoin

One of my players wanted to counterfeit coins to pay for gear, but decided to stamp them not with the face of the local lord or king, but with the face of the party’s Kenku. The Kenku that could only cast Fireball, and launched it with a frypan…

OK, so what’s the point? Every small choice the players made had a large impact, not just on the story itself (it certainly did there), but also on the community it touched. Could we capture that kind of magic using generative AI?

Enter: Antihero

So we had a few ideas now. We wanted to tell several stories whose interactions would help us flesh out the world. At the same time, we looked at other media that resonated with people, and inspiration came from an unlikely source: Breaking Bad, and the concept of the antihero. But from our initial discussions, we knew we didn’t want to go the whole “evil for my own reasons” route.

So once again we looked back to TTRPGs and their alignment system. We wanted to create a few conundrums for the player to explore and overcome:

- Is the path handed to you always the “Correct” path?

- Do the people you come to know and trust have ulterior motives?

- How do you balance the moral and ethical implications of two equally bad decisions?

- How do you justify dooming one world to save another?

Putting ChatGPT “on shrooms”

Before we go any further, I need to make one point clear, especially when purposely causing generative AI to hallucinate: DO NOT DIRECTLY USE THE OUTPUT OF AI IN A PROJECT. Let me say that again: everything you generate still needs to flow through a person. These are tools in the creative process, not a substitute for the creative process itself.

OK, with that out of the way, I’m going to be a little vague here. I don’t want to give away what we’re writing; this is something for the player to experience, after all. But the process went as follows:

- Tell the model we’re making a game that focuses on a highly branched story, where the player’s decisions have a profound effect on the game world and the choices aren’t always clear. Guidance given to the player needs to be ambiguous enough that the player can make a “right” or “wrong” decision that affects the endgame.

- Tell the model about the general layout of the world. In this case, we told it we wanted at least 5 unique areas, influenced by historical cultures and the disasters that led to their downfalls. We wanted to focus on the concepts, not necessarily the disasters themselves.

- Next, we told it about some of the gameplay mechanics: top-down RPG, single-player party, limited level system and gear progression, and a focus on coming to understand the world.

- Then we asked it for the title of the game, and it came up with Veilwalker: Realms Divided. It also came up with some interesting concepts, but ultimately we scrapped most of them. The title alone gave us enough to put those guardrails in place and stop the hallucinating.

- We spent several hours refining the overall story beats. We started a plain-text document to keep track of the things we liked, and fed parts of it back into the chat to generate better-aligned iterations.
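Here is a minimal sketch of that feed-back loop, automated instead of pasted by hand. It assumes the liked-ideas document is a list of short canon notes, picks the notes that share the most words with the next request, and restates them ahead of it. The `role`/`content` message shape follows OpenAI’s Chat Completions format; the function names, stop-word list, and notes are hypothetical placeholders, not our actual canon.

```python
import re

# Hypothetical stop-word list so filler words don't count as overlap.
STOP_WORDS = {"a", "an", "and", "by", "in", "is", "of", "the", "to"}


def _words(text: str) -> set[str]:
    """Lowercased content words; a crude stand-in for real relevance matching."""
    return set(re.findall(r"[a-z0-9']+", text.lower())) - STOP_WORDS


def select_canon(notes: list[str], prompt: str, max_notes: int = 3) -> list[str]:
    """Pick up to max_notes canon notes that share words with the new prompt."""
    prompt_words = _words(prompt)
    scored = [(len(_words(note) & prompt_words), i) for i, note in enumerate(notes)]
    # Most overlap first; ties keep the order the notes appear in the document.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [notes[i] for overlap, i in scored[:max_notes] if overlap > 0]


def build_messages(notes: list[str], prompt: str) -> list[dict[str, str]]:
    """Build a chat request that restates agreed-upon canon before the new ask."""
    canon = select_canon(notes, prompt)
    canon_text = "\n".join(f"- {note}" for note in canon) or "- (no relevant canon yet)"
    system = (
        "You are helping write a branching RPG story. "
        "Treat the following as established canon and do not contradict it:\n"
        + canon_text
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": prompt},
    ]


# Placeholder notes standing in for the plain-text "things we liked" document.
notes = [
    "The northern realm is ruled by a council.",
    "Magic is forbidden in the desert realm.",
    "The hero carries a broken compass.",
]
messages = build_messages(notes, "Describe the desert realm's capital.")
```

Word overlap is a crude relevance measure; embeddings or a keyword index would pick better notes, but the principle is the same: the model only “remembers” what you put back in front of it.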

Problems with “memory”

The public version of ChatGPT 4 is amazing at some things, but when you force it to be creative, things start falling apart at a certain point and it starts producing glaring inconsistencies. By this point it had helped us create 6 worlds and given them crises and splitting solutions, but eventually it would just…regenerate them, and stop referencing things we had agreed on before, even with direct prompting. We had hit the wall with a general-purpose model. We needed something that could work on this specific game world only.
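One plausible cause (we can’t see how ChatGPT manages long chats internally) is the context window: a model only sees a bounded number of tokens per request, so as a conversation grows, the oldest turns eventually have to be dropped or compressed. The sketch below shows naive truncation under that assumption, using a word count as a stand-in for real subword tokens; `fit_to_window` and the sample messages are hypothetical.

```python
def approx_tokens(text: str) -> int:
    # Rough proxy only: real models count subword tokens, usually more than words.
    return len(text.split())


def fit_to_window(history: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose combined size fits in the budget.

    Walks backwards from the newest message, so the oldest messages (often the
    ones where the world was first agreed on) are the first to be dropped.
    """
    kept: list[str] = []
    used = 0
    for message in reversed(history):
        cost = approx_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "World one is a frozen empire.",
    "World two is a drowned city.",
    "Describe world one again.",
]
# With a small budget, the definition of world one falls out of view,
# so a model asked to "describe world one again" has to reinvent it.
visible = fit_to_window(history, budget=10)
```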

Thankfully, this is similar to a problem I had solved before. I had trained a T5 model to ingest, decipher, and output summaries of large court cases for a legal client, to assist in research and identify inconsistencies in legal precedent. This sounds far removed from what we’re doing, but it’s not.

Explaining “Models”

T5, the model I used for that project, is a text-to-text model that can be trained for a task called “summarization”: taking input, contextualizing it, and producing a short summary. Before the model sees any text, the text goes through “tokenization”, which splits the input into tokens (words and sub-word pieces) the model can work with. Alongside the summaries, we picked out the key words and phrases in each input and assigned them relevance scores.
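To make “key words with relevance scores” concrete, here is a minimal sketch using TF-IDF, a standard scoring technique swapped in for illustration; it is not necessarily what that project used. It also simplifies tokenization: T5’s real tokenizer uses a SentencePiece subword vocabulary, while this uses a naive regex word split. The function names and sample sentences are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Naive word-level tokenizer; T5 itself uses a SentencePiece subword vocabulary.
    return re.findall(r"[a-z0-9']+", text.lower())


def tfidf_keywords(docs: list[str], top_k: int = 3) -> list[list[tuple[str, float]]]:
    """Return the top_k highest-scoring (term, score) pairs for each document."""
    tokenized = [tokenize(doc) for doc in docs]
    n_docs = len(docs)
    # Document frequency: how many documents contain each term at least once.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    results = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1
        # Term frequency times inverse document frequency; a term that appears
        # in every document scores log(1) = 0 and sinks to the bottom.
        scores = {t: (c / total) * math.log(n_docs / df[t]) for t, c in counts.items()}
        # Highest score first; ties broken alphabetically for stable output.
        ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
        results.append(ranked[:top_k])
    return results


docs = [
    "the court upheld the contract",
    "the court overturned the verdict",
    "the tenant breached the lease",
]
keywords = tfidf_keywords(docs)
```

Words shared by every document (“the”) score zero, while words unique to one document (“contract”, “upheld”) score highest, which is what makes them useful for finding related material later.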

We stored those key terms in a database alongside all the other cases we had summarized, and could then use a simple SQL query to look for related cases. Those could then be presented to legal counsel for further review.
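A query along those lines might look like the sketch below, using SQLite from Python’s standard library. The table and column names are hypothetical, and the cases and scores are placeholders rather than real data. It ranks other cases by how many key terms they share with a given case, then by the summed product of the relevance scores.

```python
import sqlite3

# Hypothetical schema: one row per case, plus one row per (case, keyword) pair.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE cases (id INTEGER PRIMARY KEY, title TEXT NOT NULL);
    CREATE TABLE case_keywords (
        case_id INTEGER NOT NULL REFERENCES cases(id),
        keyword TEXT NOT NULL,
        score REAL NOT NULL
    );
""")

# Self-join on keyword: every keyword the base case shares with a peer case
# contributes one match, weighted by the product of the two relevance scores.
RELATED_SQL = """
    SELECT peer.case_id, c.title,
           COUNT(*) AS shared,
           SUM(base.score * peer.score) AS weight
    FROM case_keywords AS base
    JOIN case_keywords AS peer
      ON peer.keyword = base.keyword AND peer.case_id <> base.case_id
    JOIN cases AS c ON c.id = peer.case_id
    WHERE base.case_id = ?
    GROUP BY peer.case_id, c.title
    ORDER BY shared DESC, weight DESC
"""


def related_cases(conn: sqlite3.Connection, case_id: int) -> list[tuple]:
    """Return (case_id, title, shared_keywords, weight) rows, best match first."""
    return conn.execute(RELATED_SQL, (case_id,)).fetchall()


# Placeholder data, not real cases.
conn.executemany("INSERT INTO cases VALUES (?, ?)",
                 [(1, "Case A"), (2, "Case B"), (3, "Case C")])
conn.executemany("INSERT INTO case_keywords VALUES (?, ?, ?)", [
    (1, "contract", 0.5), (1, "breach", 0.4),
    (2, "contract", 0.3), (2, "lease", 0.6),
    (3, "contract", 0.1), (3, "breach", 0.2),
])

for row in related_cases(conn, 1):
    print(row)
```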

What Veilwalker needs is a Large Language Model (LLM) like ChatGPT. Unfortunately, ChatGPT is proprietary to OpenAI, and customizing its models is pretty expensive. Another very competent model is LLAMA-2, by Meta. Its weights are freely available under Meta’s community license, and with a powerful enough computer and enough time, you can fine-tune it on your own data: exactly what we need to keep the story of Veilwalker consistent!

Generating the training data

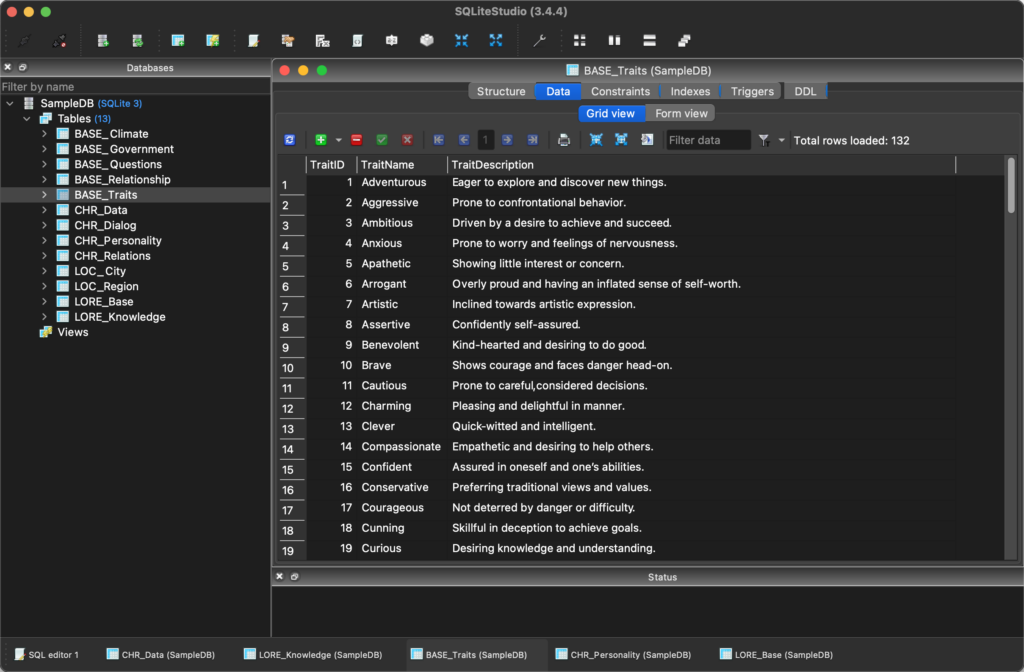

Luckily, training data can be fed to the trainer as plain text files. But Veilwalker is going to generate a lot of text: Characters, Locations, Sub-stories (Quests/Lore), and Dialog. Easy, design a database, I thought. Databases are easy to manage, I thought. Then I just query the database and feed the results into training.
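As a rough sketch of that query-then-export step, here is a small SQLite version in Python. The three tables, their columns, and the record format are hypothetical simplifications (our real schema has grown well past this), quests and lore would follow the same pattern, and the exact text layout a trainer expects depends on the training script you use. The sample rows are placeholders.

```python
import sqlite3

# Hypothetical, simplified lore schema.
SCHEMA = """
CREATE TABLE locations (id INTEGER PRIMARY KEY, name TEXT NOT NULL, description TEXT NOT NULL);
CREATE TABLE characters (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    bio TEXT NOT NULL,
    location_id INTEGER REFERENCES locations(id)
);
CREATE TABLE dialog (
    id INTEGER PRIMARY KEY,
    character_id INTEGER NOT NULL REFERENCES characters(id),
    line TEXT NOT NULL
);
"""


def export_training_text(conn: sqlite3.Connection) -> str:
    """Flatten the lore tables into plain-text records separated by blank lines."""
    records = []
    for name, description in conn.execute(
        "SELECT name, description FROM locations ORDER BY id"
    ).fetchall():
        records.append(f"Location: {name}\n{description}")

    characters = conn.execute(
        """SELECT c.id, c.name, c.bio, l.name
           FROM characters AS c LEFT JOIN locations AS l ON l.id = c.location_id
           ORDER BY c.id"""
    ).fetchall()
    for char_id, name, bio, home in characters:
        lines = [row[0] for row in conn.execute(
            "SELECT line FROM dialog WHERE character_id = ? ORDER BY id", (char_id,))]
        record = f"Character: {name}\nHome: {home or 'unknown'}\n{bio}"
        if lines:
            record += "\n" + "\n".join(f'{name} says: "{line}"' for line in lines)
        records.append(record)

    return "\n\n".join(records) + "\n"


# Placeholder rows, not actual Veilwalker content.
conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO locations VALUES (1, 'Placeholder Town', 'A placeholder description.')")
conn.execute("INSERT INTO characters VALUES (1, 'Placeholder NPC', 'A placeholder bio.', 1)")
conn.execute("INSERT INTO dialog VALUES (1, 1, 'Hello, traveler.')")
text = export_training_text(conn)
print(text)
```

Writing `text` to a `.txt` file then gives the trainer its input; the database stays the single source of truth, and the text file is just a regenerated view of it.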

And then…

It got a little more complicated than I expected, and the database has gained more structure than I’m showing here; this is roughly our third iteration of what we needed to create a training set. Still, it provides the basis for generating the text files needed to train our new model. It also ended up spawning another tool we’ll use to keep track of everything we’re writing, instead of a bunch of unorganized wiki pages and copy-pasting into public models.

Custom tooling

Without getting too far into the details (that will be another blog article), we started work on a CRUD (Create, Read, Update, Delete) application to manage the database in an easy-to-use way. Our partners helping with the writing aren’t computer-science folk, so I can’t in good conscience ask them to download a database editor and write SQL to input data. Before we get to that Project Profile, though, here’s our very early UI mockup, built in Flutter.
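The app itself is being built in Flutter, so the following is not its code. It is a minimal Python/SQLite sketch of the four operations a CRUD app wraps in a UI, against a hypothetical `characters` table. Every query is parameterized, which is part of the point: writers fill in form fields, and nothing they type is ever spliced into SQL.

```python
import sqlite3
from typing import Optional


def connect(path: str = ":memory:") -> sqlite3.Connection:
    """Open the database and make sure the (hypothetical) table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS characters ("
        "id INTEGER PRIMARY KEY, name TEXT NOT NULL, bio TEXT NOT NULL)"
    )
    return conn


def create_character(conn: sqlite3.Connection, name: str, bio: str) -> int:
    with conn:  # commits on success, rolls back on error
        cur = conn.execute("INSERT INTO characters (name, bio) VALUES (?, ?)", (name, bio))
    return cur.lastrowid


def read_character(conn: sqlite3.Connection, char_id: int) -> Optional[tuple[int, str, str]]:
    return conn.execute(
        "SELECT id, name, bio FROM characters WHERE id = ?", (char_id,)
    ).fetchone()


def update_character(conn: sqlite3.Connection, char_id: int, bio: str) -> bool:
    with conn:
        cur = conn.execute("UPDATE characters SET bio = ? WHERE id = ?", (bio, char_id))
    return cur.rowcount == 1  # False if no such character


def delete_character(conn: sqlite3.Connection, char_id: int) -> bool:
    with conn:
        cur = conn.execute("DELETE FROM characters WHERE id = ?", (char_id,))
    return cur.rowcount == 1  # False if no such character
```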

That’s the only preview you get for now; read more when we profile StorySculpt. We have big plans beyond the initial CRUD release, including the ability to start model training on your own, right in the app!

But what about the game itself?

Yeah, I know it’s a lot of ideation and AI talk, but that’s where we’re at so far. We have some game mechanics worked out and tech-demo’d in RPGMaker, and thanks go to the VisuStella team for a ton of the plugins we’ll need to make it all work. There’s nothing real to show just yet, but I can throw in some DALL-E renditions of concept art created during the ideation phase:

Yes, these are vastly different art styles, but our partners at Caturae will be helping us turn them into the actual art assets for the game. They’re initial ideas that I’m sure Caturae can use to help us create some truly immersive worlds!

Special Thanks (so far!)

- Steve – this project would never have started without your encouragement. Without this as an incentive, StorySculpt wouldn’t be a project, and quite literally, MissionMolder would not exist as a company. These projects need a custodian like MissionMolder to happen.

- Caturae – thank you for partnering with us on the art and music assets for the game. The previews I’ve seen so far have been fantastic, and I’m looking forward to integrating them into the project.

- A Stage Reborn – the creative projects we’ve made there gave us amazing experience and served as the springboard for our ideas of what this project can be.

- OpenAI – ChatGPT 4 and DALL-E 2 were instrumental in the initial ideation. Now it’s time to let our imaginations run wild!

- Meta – Thanks for your donation of LLAMA-2 to the community. Our continuation of the project relies on it.

- HuggingFace – Your tools for AI development get more people into custom models than anyone else’s. They’ll be a fantastic integration into StorySculpt, helping keep this lore and world organized and consistent!

- VisuStella – the mechanics and concepts for this game could not happen without the amazing work your team does.